When I first tackled the problem of duplicate bookings in our flight booking system, the quickest and simplest solution seemed to be using join operations across our key tables. However, as I delved deeper into the issue, the complexity and cost associated with this approach became evident. Initially, we considered the potential of using fuzzy matching algorithms and machine learning to detect duplicates. To truly understand our baseline, though, we decided to explore and benchmark the performance of join operations first.

Through detailed analysis, the limitations of join operations for large datasets and real-time processing became clear. They were not only resource-intensive but also introduced significant latency. This prompted us to look for more efficient solutions that could scale with our growing data needs.

Next, we implemented fuzzy matching algorithms to enhance the accuracy of duplicate detection. These algorithms, combined with machine learning techniques, offered a promising alternative. By continuously training the models on our data, we improved their precision and recall, reducing the incidence of false positives and negatives.

Benchmarking these approaches against traditional join operations provided valuable insights. The new methods outperformed joins in terms of both speed and accuracy, making them a viable solution for our system. This experience underscored the importance of leveraging advanced technologies to tackle complex data management challenges.

As we continue to innovate and refine our approach, I will share detailed articles on my blog about the specific techniques and strategies we employed. These insights will help others facing similar challenges to optimize their systems for better performance and efficiency. Keep an eye out for these upcoming posts to gain a deeper understanding of advanced duplicate detection methods.

Initial Thought: Complex Joins

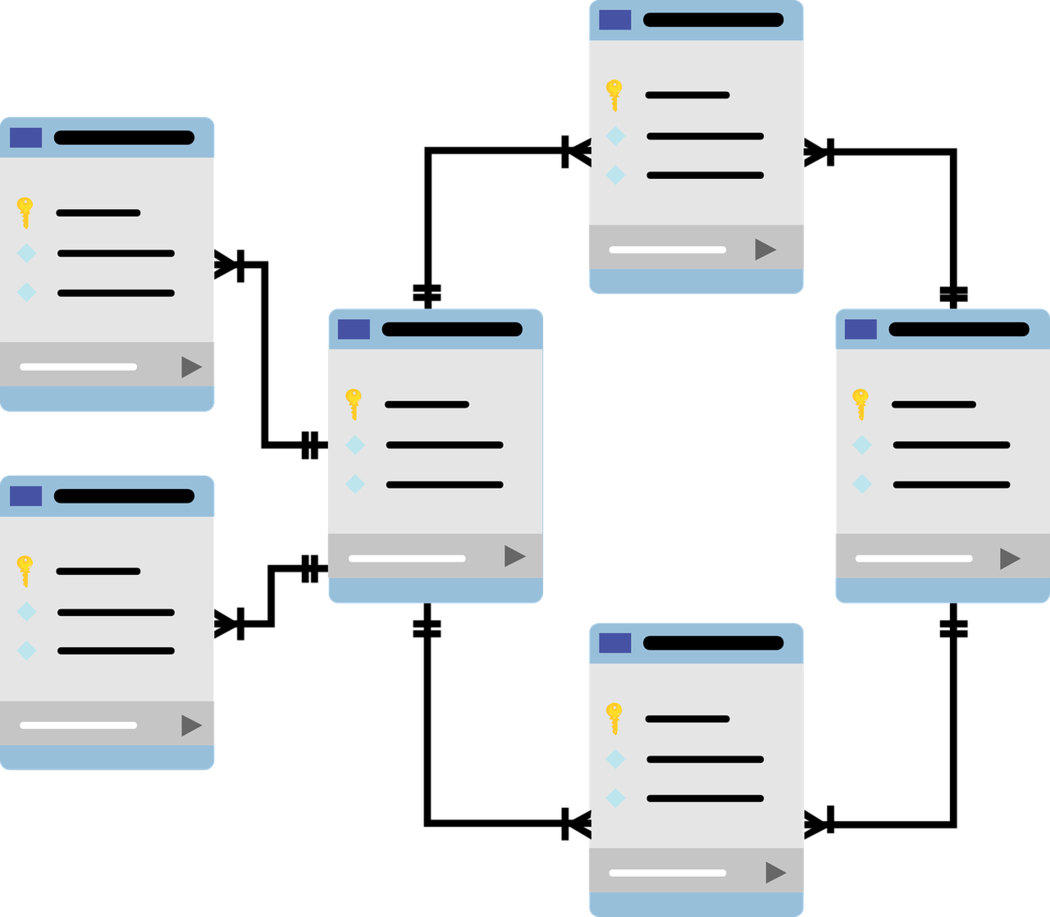

I had three key tables: Bookings, Segments, and Passengers. Each table held critical data about the flights, passengers, and bookings, respectively.

For the purpose of case, here are the sample tables along with their columns.

Bookings:

id, pnr, airlinesCode, bookingCode, paymentType, bookingStatus,

ipAddress, userAgent, baseFare, taxFare, totalFare, discount,

discountedFare, primaryContact

Segments:

id, bookingId, airlines, airlinesCode, airlinesLogo, flightNumber,

aircraft, aircraftCode, originAirportCode, originAirportName,

originTerminalCode, destinationAirportCode, destinationAirportName,

destinationTerminalCode, departureDateTime, arrivalDateTime, baggage,

duration, cabinCode

Passengers:

id, bookingId, eTickets, travellerType, firstName, lastName, dob, gender,

email, contactNumber, frequentFlyerNumber, passportNumber,

passportExpireDate, passportCopy, visaCopy, mealPreference, seatPreferenceTo identify duplicate bookings, I wrote a complex SQL query using joins to compare relevant fields across the Bookings, Segments and Passengers tables. The goal was to detect records that appeared to be the same booking based on certain criteria, such as passenger names, flight details, and booking information.

Implementing Complex Joins

SELECT

b1.id AS booking1_id,

b2.id AS booking2_id,

p1.firstName || ' ' || p1.lastName AS passenger1,

p2.firstName || ' ' || p2.lastName AS passenger2,

s1.flightNumber AS flight1,

s2.flightNumber AS flight2

FROM

Bookings b1

JOIN

Passengers p1 ON b1.id = p1.bookingId

JOIN

Segments s1 ON b1.id = s1.bookingId

JOIN

Bookings b2 ON b1.id != b2.id

JOIN

Passengers p2 ON b2.id = p2.bookingId AND p1.passportNumber = p2.passportNumber

JOIN

Segments s2 ON b2.id = s2.bookingId AND s1.flightNumber = s2.flightNumber

WHERE

b1.bookingStatus = 'confirmed'

AND b2.bookingStatus = 'confirmed';Indexing

To enhance the efficiency of our join operations, I implemented multiple indexes on key fields. These indexes significantly improved query performance by allowing faster data retrieval and reducing the overall query execution time

CREATE INDEX idx_passengers_bookingId ON Passengers (bookingId);

CREATE INDEX idx_passengers_firstName_lastName ON Passengers (firstName, lastName);

CREATE INDEX idx_passengers_frequentFlyerNumber ON Passengers (frequentFlyerNumber);

CREATE INDEX idx_passengers_passportNumber ON Passengers (passportNumber);

CREATE INDEX idx_segments_bookingId ON Segments (bookingId);

CREATE INDEX idx_segments_flightNumber ON Segments (flightNumber);

CREATE INDEX idx_segments_airlinesCode ON Segments (airlinesCode);

CREATE INDEX idx_segments_originAirportCode ON Segments (originAirportCode);

CREATE INDEX idx_segments_destinationAirportCode ON Segments (destinationAirportCode);

CREATE INDEX idx_segments_departureDateTime ON Segments (departureDateTime);

CREATE INDEX idx_segments_arrivalDateTime ON Segments (arrivalDateTime);

CREATE INDEX idx_bookings_pnr ON Bookings (pnr);

CREATE INDEX idx_bookings_airlinesCode ON Bookings (airlinesCode);

CREATE INDEX idx_bookings_bookingCode ON Bookings (bookingCode);

CREATE INDEX idx_bookings_bookingStatus ON Bookings (bookingStatus);Analyzing the Complexity and Cost

While this method was effective in identifying duplicates, it had several significant drawbacks:

- Performance Issues: Complex joins across multiple large tables resulted in slow query performance. For instance, running the above query on a dataset of 1 million bookings could take several minutes, significantly impacting the user experience.

- Resource Intensive: These queries consumed significant CPU and memory resources, leading to high operational costs. For example, the join operation required extensive use of temporary tables and sorting mechanisms, increasing I/O operations and slowing down the database.

- Indexing Costs: To optimize the join performance, we had to create multiple indexes. Maintaining these indexes added to the overhead, both in terms of storage and processing time.

Example Cost Breakdown:

- Query Runtime: On a table with 1 million rows, the join operation took approximately 120 seconds to execute.

- CPU Usage: The CPU usage spiked to 85% during the query execution.

- Memory Usage: The query consumed an additional 2 GB of RAM.

- Index Storage: Indexes on the aforementioned fields increased the storage requirement by approximately 500 MB.

- Server Cost: The additional computational load required more powerful server instances. For example, using AWS EC2 m5.large instances (2 vCPUs, 8 GB RAM) at $0.096 per hour, the cost for running continuous queries could add up quickly. For a high-traffic system requiring multiple such instances, monthly costs could easily reach several thousand dollars.

2nd Thought: Optimizing with Table Partitioning

To address these issues, I implemented table partitioning. This technique divides a large table into smaller, more manageable pieces, each holding a subset of the data. By partitioning the Passengers table based on the bookingId, I could significantly improve query performance.

Partitioning the Passengers Table

First, I created partitions for the Passengers table:

CREATE TABLE Passengers_2023_1 PARTITION OF Passengers FOR VALUES FROM (1) TO (10000);

CREATE TABLE Passengers_2023_2 PARTITION OF Passengers FOR VALUES FROM (10001) TO (20000);

-- Continue creating partitions as neededWith partitioning, the database could quickly narrow down the subset of data to search for duplicates, improving query performance and reducing resource consumption.

Optimized Query with Partitioning

The duplicate-check query was revised to take advantage of partitioned tables:

SELECT

b1.id AS booking1_id,

b2.id AS booking2_id,

p1.firstName || ' ' || p1.lastName AS passenger1,

p2.firstName || ' ' || p2.lastName AS passenger2,

s1.flightNumber AS flight1,

s2.flightNumber AS flight2

FROM

Bookings b1

JOIN

Passengers_2023_1 p1 ON b1.id = p1.bookingId

JOIN

Segments s1 ON b1.id = s1.bookingId

JOIN

Bookings b2 ON b1.id != b2.id

JOIN

Passengers_2023_1 p2 ON b2.id = p2.bookingId AND p1.passportNumber = p2.passportNumber

JOIN

Segments s2 ON b2.id = s2.bookingId AND s1.flightNumber = s2.flightNumber

WHERE

b1.bookingStatus = 'confirmed'

AND b2.bookingStatus = 'confirmed';By targeting specific partitions, the query became more efficient and scalable.

Performance Improvement with Partitioning:

- Query Runtime: Reduced to 20 seconds on the same dataset.

- CPU Usage: Reduced to 60% during the query execution.

- Memory Usage: Reduced to 1 GB of additional RAM.

- Index Storage: Indexes remained the same, but partitioning reduced the overall load on the system.

- Server Cost: With optimized performance, the need for high-cost server instances decreased. Downgrading to smaller instances like t3.medium (2 vCPUs, 4 GB RAM) at $0.0416 per hour could cut costs significantly, bringing monthly expenses down.

Transitioning from complex joins to table partitioning allowed us to handle duplicate bookings more effectively. The optimized system not only improved performance but also reduced operational costs, ensuring a smoother and more reliable booking experience. In the last part, I’ll explore implementing a simple hash-based duplicate checking system, which further enhances efficiency and accuracy. Keep an eye on my blog for more insights and strategies!